In the late 1970s and early 1980s, desktop computer operating systems had very basic requirements by today’s standards. Operating systems like CP/M and MS-DOS were limited to one user at a time, who could only execute one program at a time.

Only when the currently running program finished executing or was terminated by the user could another program be run.


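Typical microprocessors of that time, such as the Intel 8080, Zilog Z80, Motorola 6800 and MOS Technology 6502, had a single 8-bit core, could address 64 kB of memory over a 16-bit address bus, and ran at clock frequencies of roughly 1 to 4 MHz. The Intel 8088 used in the original IBM PC was a step up, with a 16-bit core, an 8-bit external data bus, and a 20-bit address bus that could reach 1 MB of memory.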

They operated by fetching an instruction from memory, executing it, then fetching and executing the next instruction, and so on. For CP/M and MS-DOS, this was perfectly adequate.

Another contemporary operating system was UNIX. Unlike CP/M or MS-DOS, it was a multi-user, multitasking operating system: several users could use the system at the same time, and each user could run one or more programs at the same time.

So how did UNIX run several programs from several users at once on a single-core microprocessor? The operating system’s kernel ran a piece of code called a process scheduler, which rapidly switched between users and their processes, allocating each a slice of processor time.

In other words, the microprocessor and the other computer resources were time-shared, or time-multiplexed, between processes. These task or context switches happened many times per second and gave each user the illusion of exclusive use of the system.
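To make time slicing concrete, here is a minimal sketch of a round-robin scheduler on a single core. It assumes a fixed time slice (quantum) and no priorities, which is far simpler than any real UNIX scheduler; the process names and CPU times are hypothetical.

```python
from collections import deque

def round_robin(jobs, quantum):
    """Simulate one core time-sharing jobs in fixed time slices.

    jobs: dict mapping a process name to the CPU time it still needs.
    quantum: length of one time slice (must be > 0).
    Returns the order in which slices were granted and each job's finish time.
    """
    ready = deque(jobs.items())  # ready queue of (name, remaining time)
    clock = 0
    timeline = []
    finished = {}
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run one slice, or less if the job ends
        clock += run
        timeline.append(name)
        if remaining > run:
            # Context switch: the job goes to the back of the queue.
            ready.append((name, remaining - run))
        else:
            finished[name] = clock
    return timeline, finished

timeline, finished = round_robin({"editor": 3, "compiler": 5, "shell": 2}, quantum=2)
print(timeline)  # ['editor', 'compiler', 'shell', 'editor', 'compiler', 'compiler']
print(finished)  # {'shell': 6, 'editor': 7, 'compiler': 10}
```

Every job makes progress within a couple of slices, which is what makes each user feel they have the machine to themselves.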

Today’s microprocessors, more commonly called CPUs (Central Processing Units), have multiple 64-bit cores, typically work with 16 GB, 32 GB or more of memory, and run at clock frequencies of 2 GHz to 4 GHz.

To fit multiple cores into a single package as process nodes shrink toward 7 nm, chip designers rely on extremely sophisticated techniques that push the 193 nm immersion lithography manufacturing process to its physical limits.

Chip designers are therefore always looking for ways to use resources more efficiently and offer greater processing power. Apart from multiple cores, many CPUs now also incorporate a feature called Simultaneous Multi-Threading.

What is a Core

In simple terms, a core is a single processing unit, with its own control unit, arithmetic logic unit (ALU), registers and cache memory, capable of independently executing the instructions of a computational task. Executing an instruction involves a three-phase cycle of fetch, decode and execute, commonly known as the instruction cycle.
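The instruction cycle can be sketched as a loop around a program counter. The toy CPU below has a single accumulator register and a made-up LOAD/ADD/MUL/HALT instruction set; it is an illustration of fetch, decode and execute, not a model of any real processor.

```python
def run(program):
    """Toy CPU with one accumulator register and a program counter.

    Each loop iteration is one instruction cycle: fetch, decode, execute.
    The instruction set is hypothetical, invented for this example.
    """
    pc = 0   # program counter: index of the next instruction
    acc = 0  # accumulator register
    while True:
        instruction = program[pc]      # fetch
        pc += 1
        opcode, operand = instruction  # decode
        if opcode == "LOAD":           # execute
            acc = operand
        elif opcode == "ADD":
            acc += operand
        elif opcode == "MUL":
            acc *= operand
        elif opcode == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode {opcode!r}")

print(run([("LOAD", 5), ("ADD", 3), ("MUL", 2), ("HALT", None)]))  # 16
```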

With older CPUs, the full cycle was completed before the next instruction was fetched. Modern CPUs, however, use instruction pipelining, which lets the processor fetch the next instruction while the current one is still being decoded or executed.
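A rough cycle count shows why pipelining helps. This sketch assumes an idealized pipeline in which each of the three stages takes exactly one clock cycle and nothing ever stalls; real pipelines are deeper and do stall on branches and memory accesses.

```python
def cycles_without_pipeline(n_instructions, n_stages=3):
    # Each instruction passes through every stage before the next one is fetched.
    return n_instructions * n_stages

def cycles_with_pipeline(n_instructions, n_stages=3):
    # Ideal pipeline (no stalls): the first instruction needs n_stages cycles
    # to fill the pipeline, then one instruction completes every cycle.
    if n_instructions == 0:
        return 0
    return n_stages + n_instructions - 1

n = 100
print(cycles_without_pipeline(n))  # 300
print(cycles_with_pipeline(n))     # 102
```

For 100 instructions the idealized speedup is 300 / 102, or about 2.9, and it approaches the number of stages as the instruction count grows.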

Over successive generations of CPU development, increasing CPU power was largely a matter of raising the operating frequency, so that more instructions could be executed per second.

However, technical and physical constraints, such as heat dissipation and the leakage current caused by electron (quantum) tunneling, mean that, barring some radical breakthrough, clock frequencies are already close to their practical limit.

To pack in more processing power, chip designers have for quite some time been incorporating more than one processing unit, or core, in a single CPU package. Each core can execute instructions independently of, and simultaneously with, the other cores in the package.

The first commercially available multi-core CPU, the dual-core IBM POWER4, was released in 2001. Since then, other manufacturers such as Intel, AMD, Nvidia (multi-core GPUs), Sun Microsystems and Texas Instruments (multi-core DSPs) have also introduced multi-core processors.

What is a Thread

In software, a thread is a single sequence of instructions that can execute independently of, and concurrently with, other sections of code in the same program. Each thread has its own program counter, stack and set of registers, while sharing the process’s memory and other resources with the other threads.
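As a small illustration, this sketch uses Python’s standard `threading` module; the function `count_words` and its inputs are hypothetical. Local variables live on each thread’s own stack, while the `results` dictionary is shared by every thread in the process.

```python
import threading

results = {}  # shared by all threads in the process

def count_words(name, text):
    # 'words' is a local variable, so each thread has its own copy.
    words = text.split()
    results[name] = len(words)  # each thread writes a different key

threads = [
    threading.Thread(target=count_words, args=("intro", "cores and threads")),
    threading.Thread(target=count_words, args=("summary", "threads share a core's resources")),
]
for t in threads:
    t.start()  # each thread begins executing independently
for t in threads:
    t.join()   # wait for both threads to finish

print(results)
```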

When threads are discussed in the context of processor cores, what is usually meant is how a CPU’s Simultaneous Multi-Threading (SMT) feature handles them.

SMT is a hardware-level implementation of multi-threading supported by many of today’s processors, including the IBM z13 and POWER5, AMD Ryzen Threadripper, and Intel Xeon Phi and Core i7 (although Intel dropped the feature from its Core i7-9700K).

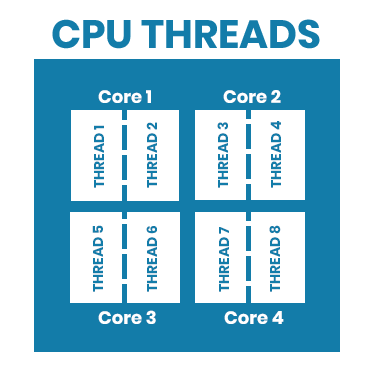

Intel’s implementation of SMT is more commonly known as Hyper-Threading Technology (HTT). On Intel’s Core processors, it lets each physical core run two threads, so that the operating system sees each core as two distinct logical (or virtual) processors.
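From software, the effect is visible in the processor count the operating system reports. Python’s `os.cpu_count()` returns the number of logical processors (or `None` if it cannot tell); the helper `expected_logical_processors` below is hypothetical and simply multiplies physical cores by threads per core. A 6-core CPU with two-way SMT, such as the AMD Ryzen 5 1600, shows up as 12 logical processors.

```python
import os

def expected_logical_processors(physical_cores, threads_per_core=2):
    # Hypothetical helper: with SMT/HTT enabled, the OS typically sees
    # one logical processor per hardware thread.
    return physical_cores * threads_per_core

# os.cpu_count() reports logical processors (it may return None if unknown).
print("Logical processors on this machine:", os.cpu_count())
print(expected_logical_processors(6))  # 12
```

The standard library does not report physical cores; the third-party `psutil` package offers `psutil.cpu_count(logical=False)` for that.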

According to Intel, Hyper-Threading improves performance by up to 30%, while for the AMD Ryzen 5 1600, one study found that SMT increased processor performance by 17%.

Applications take advantage of Simultaneous Multi-Threading through the operating system, which schedules their threads onto the available logical processors. Traditional applications had only one thread of execution: if the program was blocked waiting for an I/O operation to complete, the entire application was held up.

Multi-threaded applications, on the other hand, have several threads within a single process. If one thread is blocked waiting for an I/O operation, the other threads can continue executing.
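The effect of overlapping blocked threads can be measured. In this sketch, `fake_io` is a hypothetical stand-in that uses `time.sleep` to imitate a blocking I/O call; run one after another, five calls take about five times as long as when each waits in its own thread.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_io(task_id, seconds=0.2):
    # Stand-in for a blocking I/O call (disk read, network request):
    # the thread sleeps and uses no CPU while it waits.
    time.sleep(seconds)
    return task_id

start = time.perf_counter()
sequential = [fake_io(i) for i in range(5)]  # each call waits for the previous one
sequential_time = time.perf_counter() - start

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    threaded = list(pool.map(fake_io, range(5)))  # the five waits overlap
threaded_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f}s, threaded: {threaded_time:.2f}s")
```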

A typical example is a word processor, which has to process keyboard input, check spelling and auto-save the document. In a single-threaded application, these tasks would be performed in series, possibly making the keyboard momentarily unresponsive while the auto-save completes.

In a multi-threaded application, they can be performed concurrently, so a delay in one task does not hold up the others.
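The word processor example can be sketched with two threads: one applying keystrokes and one auto-saving in the background. Everything here is hypothetical (the names, the half-second-free "disk write" simulated with `time.sleep`, and the omission of spell checking for brevity); the point is that the slow save happens outside the lock, so typing is never held up by it.

```python
import queue
import threading
import time

document = []                # shared text buffer
doc_lock = threading.Lock()  # protects 'document'
keystrokes = queue.Queue()   # keyboard events waiting to be handled
saved = []                   # stands in for copies written to disk
stop_saving = threading.Event()

def keyboard_handler():
    # Applies each keystroke as soon as it arrives.
    while True:
        key = keystrokes.get()
        if key is None:  # sentinel: input has ended
            break
        with doc_lock:
            document.append(key)

def autosaver(interval=0.05):
    # Takes a quick snapshot under the lock, then does the slow "write"
    # without holding it, so typing is not blocked by the save itself.
    while not stop_saving.is_set():
        with doc_lock:
            snapshot = "".join(document)
        time.sleep(interval)  # pretend the disk write is slow
        saved.append(snapshot)

typing = threading.Thread(target=keyboard_handler)
saving = threading.Thread(target=autosaver)
typing.start()
saving.start()

for ch in "hello":
    keystrokes.put(ch)
keystrokes.put(None)

typing.join()
stop_saving.set()
saving.join()
saved.append("".join(document))  # final save on exit
print(saved[-1])  # hello
```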

Final Thoughts

With today’s operating systems, which are usually called upon to run several applications simultaneously, a single-core CPU would make the system very sluggish, as the operating system task-switched between processes, allocating each its share of CPU time.

In contrast, multi-core CPUs allow an operating system to run multiple applications in parallel and independently of each other. Task switching can still take place, but the load is shared, and running tasks in parallel equates to more processing power.

Multi-core CPUs also allow a single, processing-intensive application to split its sub-tasks across multiple cores and execute them in parallel, increasing processing throughput and reducing execution time.
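Here is a minimal sketch of splitting one CPU-heavy job across cores, using counting primes below 100,000 as a stand-in workload. In the standard CPython build, the global interpreter lock stops threads from running Python bytecode in parallel, so this sketch uses worker processes via `concurrent.futures.ProcessPoolExecutor`; the `if __name__ == "__main__":` guard is needed on platforms that start workers by re-importing the script. The helper names are hypothetical.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from math import isqrt

def count_primes(bounds):
    # CPU-bound sub-task: count the primes in [lo, hi) by trial division.
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, isqrt(n) + 1)):
            count += 1
    return count

def split(limit, parts):
    # Divide [0, limit) into at most `parts` contiguous chunks.
    step = -(-limit // parts)  # ceiling division
    return [(start, min(start + step, limit)) for start in range(0, limit, step)]

if __name__ == "__main__":
    chunks = split(100_000, os.cpu_count() or 1)
    # Each chunk goes to a separate worker process, which the OS
    # is free to schedule on a different core.
    with ProcessPoolExecutor() as pool:
        total = sum(pool.map(count_primes, chunks))
    print(total)  # 9592 primes below 100,000
```

Splitting the range into one chunk per logical processor is the simplest choice; uneven chunks (larger numbers take longer to test) mean some cores finish early.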

Simultaneous Multi-Threading allows a core to work on two software threads at once, increasing throughput, although not by as much as adding cores. The difference between cores and threads is that each core has its own execution resources for its exclusive use, whereas the threads running on an SMT core share that core’s resources.

Benefits of multi-threading include:

- Responsiveness – if one thread blocks waiting for a hardware request to return a result or data, another thread can use this otherwise idle time to complete other tasks

- Resource sharing – threads share data and other resources, and multiple tasks can be executed simultaneously in a common address space

- Efficiency – thread creation, management, and context switching between threads are much more efficient than the equivalent operations for processes

- Scalability – a single-threaded application can only run on a single core, even if more cores are available. Multi-threaded applications, however, can run concurrently on one core or be split across multiple cores
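Resource sharing is easy to see in code: two threads below fill different halves of the same `bytearray`, and the main thread reads the result directly, with nothing copied. Separate processes would each work on their own copy and need an explicit mechanism such as pipes or shared memory to exchange data. The `fill` function and buffer size are hypothetical.

```python
import threading

buffer = bytearray(8)  # one block of memory in the process's address space

def fill(start, stop, value):
    # Each thread writes its own slice of the same buffer; nothing is copied.
    for i in range(start, stop):
        buffer[i] = value

workers = [
    threading.Thread(target=fill, args=(0, 4, 1)),
    threading.Thread(target=fill, args=(4, 8, 2)),
]
for w in workers:
    w.start()
for w in workers:
    w.join()

print(list(buffer))  # [1, 1, 1, 1, 2, 2, 2, 2]
```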

Since threads share resources, one disadvantage of multi-threading arises when threads contend for the same resources, causing bottlenecks, deadlocks or race conditions. This can result in no gain whatsoever, and in some cases even a degradation in performance.
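A race condition can be sketched with a shared counter. The unlocked version reads and writes the value in separate steps, so updates from different threads can be lost; the locked version is correct but forces threads to take turns, which is exactly the contention that can wipe out any speedup. (Deadlocks, by contrast, typically arise when threads acquire several locks in different orders; a common remedy is to always acquire them in the same order.) The class and function names are hypothetical.

```python
import threading

class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def unsafe_increment(self):
        # Read and write are separate steps; another thread may run in
        # between, so some updates can be lost (a race condition).
        current = self.value
        self.value = current + 1

    def safe_increment(self):
        # Holding the lock makes the read-modify-write indivisible with
        # respect to other threads using the same lock.
        with self._lock:
            self.value += 1

def run_in_threads(increment, n_threads=4, n_iterations=100_000):
    def worker():
        for _ in range(n_iterations):
            increment()
    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

unsafe, safe = Counter(), Counter()
run_in_threads(unsafe.unsafe_increment)
run_in_threads(safe.safe_increment)
print(unsafe.value)  # may be less than 400000, and may vary between runs
print(safe.value)    # 400000
```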

Finally, since the order in which threads run is decided at run time by the operating system’s scheduler (and, on SMT cores, by how the hardware interleaves them), there is no guarantee that threads will execute in the same order, or under the same conditions, every time. This makes multi-threaded code non-deterministic and often difficult to write and test.
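The sketch below makes the non-determinism visible: five threads record when they finish, and the order can change from run to run. The random delay is an assumption added to exaggerate the timing variation that real programs get from the scheduler, other load and I/O.

```python
import random
import threading
import time

finish_order = []
order_lock = threading.Lock()

def task(task_id):
    # Random delay exaggerates the timing variation seen in real programs.
    time.sleep(random.uniform(0, 0.01))
    with order_lock:
        finish_order.append(task_id)

threads = [threading.Thread(target=task, args=(i,)) for i in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(finish_order)          # order may differ on every run
print(sorted(finish_order))  # [0, 1, 2, 3, 4] on every run
```

A test that checked the exact finish order would pass or fail at random; checking the sorted result tests a property that holds for every possible interleaving.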